Reading Euler's Introductio in Analysin Infinitorum

Noted historian of mathematics Carl Boyer called Euler's Introductio in Analysin Infinitorum "the foremost textbook of modern times"[1] (guess which is the foremost textbook of all time). Published in two volumes in 1748, the Introductio takes up polynomials and infinite series (Euler regarded the two as virtually synonymous), exponential and logarithmic functions, trigonometry, the zeta function and formulas involving primes, partitions, and continued fractions. That's Book I, and the list could continue; Book II concerns analytic geometry in two and three dimensions. The Introductio was written in Latin[2], like most of Euler's work. This article considers only part of Book I, and a small part at that. The Introductio has been massively influential from the day it was published and established the term "analysis" in its modern usage in mathematics. It is eminently readable today, in part because so many of the subjects it touches on have been fixed in stone from that day to this, with Euler's notation, terminology, choice of subject, and way of thinking adopted almost universally.

Both volumes have been translated into English by John D. Blanton as Introduction to Analysis of the Infinite[3]. Blanton starts his short introduction like this:

In October, 1979, Professor André Weil spoke at the University of Rochester on the Life and Works of Leonhard Euler. One of his remarks was to the effect that he was trying to convince the mathematical community that our students of mathematics would profit much more from a study of Euler's Introductio in Analysin Infinitorum, rather than of the available modern textbooks.

The Introductio is an unusual mix of somewhat elementary matters, even for 1748, together with cutting-edge research. Euler went to great pains to lay out facts and to explain. Not always to prove either — he states at many points that a polynomial of degree n has exactly n real or complex roots with nary a proof in sight. He develops infinite series with blithe unconcern for convergence and gives a hand-waving proof of the intermediate value theorem (§33).

The point is not to quibble with the great one, but to highlight his unerring intuition in ferreting out and motivating important facts, putting them in proper context, connecting them with each other, and extending the breadth and depth of the foundation in an enduring way, ironclad proofs to follow. Large sections of mathematics for the next hundred years developed almost as a series of footnotes to Euler and this book in particular, researchers expanding his work, proving or re-proving his theorems, and firming up the foundation. Consider the estimate of Gauss, born soon before Euler's death (Euler 1707 - 1783, Gauss 1777 - 1855) and the most exacting of mathematicians:

... the study of Euler's works will remain the best school for different fields of mathematics and nothing else can replace it.[5]

Book I is divided into 18 chapters and 381 numbered sections, so Chapter I contains §1 — §26, Chapter II continues at §27, and so on. The renowned formula named after Euler is found in §138 (e, the base of the natural logarithms, had been introduced earlier):

\[ e^{iv} = \cos{v} + i \sin{v}, \]

although he put \( \sqrt{-1} \) rather than \( i \) — Boyer says Euler was the first to use \( i \) for \( \sqrt{-1} \), but this came later (1968, p 484). §167 resolves the celebrated Basel problem by showing that:

\[ \zeta(2) = \sum_{n=1}^\infty {1 \over n^2} = 1 + {1 \over 4} + {1 \over 9} + {1 \over 16} + {1 \over 25} + \cdots = {\pi^2 \over 6}. \]

The summation sign was Euler's idea[4], incidentally — "summam indicabimus signo \( \Sigma \)" he wrote in 1755 (we will indicate a sum by the sign \( \Sigma \)).

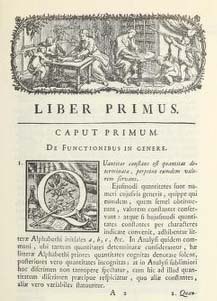

Chapter I, pictured here, is titled "De Functionibus in Genere" (On Functions in General) and the most cursory reading establishes that Euler's concept of a function is virtually identical to ours. Here is his definition on page 2:

A function of a variable quantity (functio quantitatis variabilis) is an analytic expression composed in any way whatsoever of the variable quantity and numbers or constant quantities.

Euler was not the first to use the term "function": Leibniz and Johann Bernoulli were using the word and groping towards the concept, Leibniz as early as 1673. But Euler broadened the definition (an analytic expression composed in any way whatsoever!) and put it at the center of analysis, the informing concept of the entire enterprise. He distinguishes between single-valued and multiple-valued functions like \( \sqrt{2z + z^2} \). He considers implicit as well as explicit functions and categorizes them as algebraic, transcendental, rational, and so on. The concept of an inverse function was second nature to him, the foundation for an extended treatment of logarithms. He called polynomials "integral functions"; the term didn't stick, but the interest in this kind of function did. You know you're in for a ride when he mentions in passing early on that \( z^{\sqrt{2}} \) is not a transcendental function because \( \sqrt{2} \) is a constant.

Chapter II concerns writing a polynomial as a product of linear factors involving its roots and states unequivocally that "a polynomial function of z in which the highest power of z is n will contain n linear factors" (§28):

Hinc Functio ipsius z integra, in qua exponens summae potestatis ipsius z est = n, continebit n Factores simplices.

That is:

\[ {f(z)} = {z^n + a_{n-1}z^{n-1} + a_{n-2}z^{n-2} + \cdots + a_0} = {(z - \beta_0)}{(z - \beta_1)} \cdots {(z - \beta_{n-1})}, \]

where the \( a_i \) are real and the \( \beta_i \) complex (or imaginarii as he put it). He points out that the \( \beta_i \) are the roots, the values of \( z \) such that \( f(z) = 0 \). He says that complex factors come in pairs and that the product of the two factors in each pair is a quadratic polynomial with real coefficients; that the number of complex roots is even; that a polynomial of odd degree has at least one real root; and that if a real decomposition is wanted, then linear and quadratic factors are sufficient.

Then he pivots to partial fractions, which take up the better part of Chapter II. Maybe he's setting up for integrating fractions of polynomials; that's where the subject came up in my education (and the only place). Calculus proper is nowhere to be found, incidentally, though infinite processes are woven in throughout: infinite sums and products, limiting processes, and free use of the symbol \( \infty \) as if it were just another number. In §40, he works out a decomposition by assuming there is one with the denominators on the right and solving for the constant numerators (\( A = 1, B = 1, C = -1 \)):

\[ {{1 + z^2} \over {z - z^3}} = {{1 \over z} + {1 \over {1 - z}} - {1 \over {1 + z}}}. \]
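The numerators can be double-checked with the cover-up rule for simple linear factors: delete one factor from the denominator and evaluate what remains at that factor's root. The Python below is a sketch of that modern shortcut, not Euler's own procedure (he solves for the unknowns directly), and the helper names are hypothetical. It uses exact fractions, so the spot check at \( z = 1/3 \) comes out exact.

```python
from fractions import Fraction

def numerator(z):
    """Numerator 1 + z^2 of the example in §40."""
    return 1 + z * z

# Denominator z - z^3 = z (1 - z)(1 + z): (label, root, factor) for each linear factor.
FACTORS = [
    ("z", 0, lambda z: z),
    ("1 - z", 1, lambda z: 1 - z),
    ("1 + z", -1, lambda z: 1 + z),
]

def cover_up(num, factors):
    """Constant numerator over each simple linear factor (cover-up rule)."""
    result = {}
    for label, root, _ in factors:
        rest = 1
        for other_label, _, factor in factors:
            if other_label != label:
                rest *= factor(root)   # remaining factors evaluated at this root
        result[label] = Fraction(num(root), rest)
    return result

coeffs = cover_up(numerator, FACTORS)
print(coeffs)

# Spot check at z = 1/3: both sides should agree exactly.
z = Fraction(1, 3)
lhs = numerator(z) / (z - z**3)
rhs = coeffs["z"] / z + coeffs["1 - z"] / (1 - z) + coeffs["1 + z"] / (1 + z)
print(lhs, rhs)  # 15/4 15/4
```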

That's the thing about Euler, he took exposition, teaching, and example seriously. He was prodigiously productive; his Opera Omnia is seventy volumes or something, taking up a shelf top to bottom at my college library. Like the phenomenally prolific Paul Erdős in modern times, Euler must have depended on high caliber assistants and devoted publishers to turn his ideas and furiously scratched pages into published works (when blind later in life, he dictated aloud).

Chapter IV is "On the Development of Functions in Infinite Series" and starts like this:

Since both rational functions and irrational functions of z are not of the form of polynomials \( A + Bz + Cz^2 + Dz^3 + \cdots \), where the number of terms is finite, we are accustomed to seek expressions of this type with an infinite number of terms which give the value of the rational or irrational function. Even the nature of the transcendental functions seems to be better understood when it is expressed in this form, even though it is an infinite expression. Since the nature of polynomial functions is very well understood, if other functions can be expressed by different powers of z in such a way that they are put in the form \( A + Bz + Cz^2 + Dz^3 + \cdots \), then they seem to be in the best form for the mind to grasp their nature, even though the number of terms is infinite.

In the case of quotients of polynomials, his method is to assume an infinite series expansion, cross multiply, then equate coefficients for the respective powers (there are an infinite number of them). In §61, for example, he wants to solve for A, B, C and the rest in terms of the coefficients of the original function on the left:

\[ {{a + bz} \over {\alpha + \beta z + \gamma z^2}} = {A + Bz + Cz^2 + Dz^3 + Ez^4 + \cdots}. \]

He works it out in all generality, then applies the result to:

\[ f(z) = {{1 + 2z} \over {1 - z - z^2}}. \]

Let's go right to that example and apply Euler's method. We want to find A, B, C and so on such that:

\[ {{1 + 2z} \over {1 - z - z^2}} = {A + Bz + Cz^2 + Dz^3 + Ez^4 + \cdots}. \]

Cross multiply, expanding the right side and collecting terms:

\[ 1 + 2z = (1 - z - z^2)(A + Bz + Cz^2 + Dz^3 + \cdots) \]

\[ \begin{array}{rrrrrr} = & A & + Bz & + Cz^2 & + Dz^3 & + \cdots \\ & & - Az & - Bz^2 & - Cz^3 & - \cdots \\ & & & - Az^2 & - Bz^3 & - \cdots \\ \hline = & A & + (B - A)z & + (C - B - A)z^2 & + (D - C - B)z^3 & + \cdots, \end{array} \]

so by equating the coefficients in the first two columns (units and first power of z):

\[ A = 1, \hspace{10pt} {B - A} = 2 \Rightarrow B = 3. \]

There is no \( z^2 \) on the left, so the coefficient of \( z^2 \) on the right is \( 0 \); that is, \( C−B−A = 0 \) or \( C = B + A \). Similarly \( D = C + B \) and in fact each following coefficient equals the sum of the two before it:

\[ \small{f(z) = {{1 + 2z} \over {1 - z - z^2}} = {1 + 3z + 4z^2 + 7z^3 + 11 z^4 + 18z^5 + \cdots} = {\sum_{n=0}^\infty a_n z^n}, a_0 = 1, a_1 = 3, a_{n+2} = a_{n+1} + a_n.} \]

That's a Fibonacci-like sequence, the Lucas numbers \( 2, 1, 3, 4, 7, 11, \ldots \) without the leading 2, for which:

\[ {a_{n+1} \over a_n} \rightarrow \phi = {{\sqrt{5} + 1} \over 2} \text{ as } n \rightarrow \infty. \]

The proof is similar to that for the Fibonacci numbers. By the ratio test, the series converges for \( \lvert z \rvert < 1/\phi = \phi - 1 \approx 0.618 \), not that Euler addressed convergence. I learned the ratio test long ago, but not Euler's method, and am the poorer for it.
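Euler's recipe of cross-multiplying and equating coefficients is, in modern terms, power series division, and it fits in a short loop. The Python below is a sketch; the function name and the lowest-power-first coefficient lists are our own conventions. It recovers the coefficients of \( (1 + 2z)/(1 - z - z^2) \), watches \( a_{n+1}/a_n \) approach \( \phi \), and sums the series at \( z = 1/2 \), inside the radius of convergence, where the closed form gives \( f(1/2) = 2/(1/4) = 8 \).

```python
import math
from fractions import Fraction

def series_coefficients(num, den, n_terms):
    """Coefficients A, B, C, ... of num(z)/den(z) = A + Bz + Cz^2 + ...

    num and den are coefficient lists, lowest power first, with den[0] != 0.
    Assume num = den * (A + Bz + ...) and equate the coefficients of
    z^0, z^1, z^2, ... one at a time, solving for each new unknown.
    """
    coeffs = []
    for n in range(n_terms):
        target = num[n] if n < len(num) else 0   # coefficient of z^n on the left
        # contribution of already-known coefficients to z^n on the right
        known = sum(den[k] * coeffs[n - k] for k in range(1, min(n, len(den) - 1) + 1))
        coeffs.append(Fraction(target - known) / den[0])
    return coeffs

a = series_coefficients([1, 2], [1, -1, -1], 200)
print([int(c) for c in a[:8]])   # [1, 3, 4, 7, 11, 18, 29, 47]

phi = (1 + math.sqrt(5)) / 2
print(float(a[-1] / a[-2]), phi)  # the ratio a_{n+1}/a_n approaches phi

# Partial sum at z = 1/2 (inside |z| < 1/phi) versus the closed form f(1/2) = 8
partial = sum(float(c) * 0.5**n for n, c in enumerate(a))
print(partial)
```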

Euler's treatment of exponential and logarithmic functions is indistinguishable from what algebra students learn today, though a close reader can sense that logs were of more than theoretical interest in those days. Briggs's and Vlacq's ten-place log tables revolutionized calculating and provided bedrock support for practical calculators for over three hundred years. How quickly we forget, beneficiaries of electronic calculators and computers for fifty years. In 1600, taking a tenth root to any precision might take hours for a practiced calculator. By 1650, log tables at hand, seconds. Here is §102 in its entirety:

Introductio in Analysin Infinitorum, §102

Just as, given a number \( a \), for any value of \( z \), we can find the value of \( y \), so, in turn, given a positive value for \( y \), we would like to give a value for \( z \), such that \( a^z = y \). This value of \( z \), insofar as it is viewed as a function of \( y \), is called the LOGARITHM of \( y \). The discussion of logarithms supposes that there is some fixed constant to be substituted for \( a \), and this number is the base for the logarithm. Having assumed this base, we say the logarithm of \( y \) is the exponent in the power \( a^z \) such that \( a^z = y \). It has been customary to designate the logarithm of \( y \) by the symbol \( \log y \). If \( a^z = y \), then \( z = \log y \). From this we understand that the base of the logarithms, although it depends on our choice, still should be a number greater than 1. Furthermore, it is only of positive numbers that we can represent the logarithm with a real number.

For \( z = \log y \), Euler writes \( z = ly \), but the idea and hyper-modern presentation are there: \( \log{vy} = \log v + \log y \); \( \log{y^r} = r \log y \) for any real number \( r \); the change of base rule; \( \log 1 = 0 \); that \( \log \) is an increasing function if \( a > 1 \); that the base \( a \) can be any positive number other than 1, but you might as well stick to \( a > 1 \) because if \( 0 < a < 1 \), you can take its reciprocal as the base without loss of generality. He points out that for base \( a > 1 \), \( \log z \rightarrow \infty \) as \( z \rightarrow \infty \) and \( \log z \rightarrow -\infty \) as \( z \rightarrow 0 \).

In §106, Euler calculates \( \log_{10} 5 \) using binary fractions:

\[ \begin{array}{ccccccccccccccccc} 1 & < & 10^{1/8} & < & 10^{1/4} & < & 10^{3/8} & < & 10^{1/2} & < & 10^{5/8} & < & 10^{3/4} & < & 10^{7/8} & < & 10 \\ 1 & < & 1.334 & < & 1.778 & < & 2.371 & < & 3.162 & < & 4.217 & < & 5.623 & < & 7.499 & < & 10 \end{array} \]

The second row gives the decimal equivalents for clarity, not that a would-be calculator knows them in advance. The idea is that since \( 10^{5/8} < 5 < 10^{3/4} \), \( 5/8 < {\log_{10} 5} < 3/4 \), and an iterative algorithm is used to drill in as far as desired. The calculation is based on observing that the next two lines imply the third:

\[ z = \log y \Rightarrow 10^z = y \Rightarrow 10^{z/2} = y^{1/2}. \] \[ x = \log v \Rightarrow 10^x = v \Rightarrow 10^{x/2} = v^{1/2}. \] \[ \therefore 10^{{(x + z)} / 2} = {(yv)}^{1/2}, \text{ so } {{x + z} \over 2} = \log \sqrt{vy}. \]

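The iterative process starts with \( v_0 = 1, z_0 = 10 \) and results in \( \log r_0 = \log \sqrt{10} = 1/2 \), the top row below. In the table, \( v_n \) and \( z_n \) are the two numbers bracketing 5, and \( y_n \) and \( x_n \) are their logarithms (the letters are paired differently than in the lines just above):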

\[ \begin{array}{c|cc|cc|cc} \hskip{2pt} n \hskip{2pt} & \hskip{5pt} v_n \hskip{5pt} & \hskip{5pt} z_n \hskip{3pt} & \hskip{3pt} y_n = \log v_n \hskip{2pt} & \hskip{2pt} x_n = \log z_n \hskip{3pt} & \hskip{3pt} r_n = \sqrt{v_n z_n} \hskip{3pt} & \hskip{3pt} \log r_n = \small{{(x_n + y_n)}/2} \hskip{3pt} \\ \hline 0 & 1 & 10 & 0 & 1 & 3.162278 & \small{1/2} \\ 1 & 3.162278 & 10 & \small{1/2} & 1 & 5.623413 & \small{3/4} \\ 2 & 3.162278 & 5.623413 & \small{1/2} & \small{3/4} & 4.216965 & \small{5/8} \\ 3 & 4.216965 & 5.623413 & \small{5/8} & \small{3/4} & 4.869675 & \small{11/16} \end{array} \]

\( r_n \) approaches 5 from above and below ever more closely as the iteration proceeds, where \( \log r_n \) is a binary fraction with denominator doubling at each step. When \( n = 2 \), for example, \( r_2 = 4.216965 < 5 \), so at the next step, \( v_3 = r_2 \) and \( z_3 \) is the first \( r_k \) on the list greater than 5 working up the column. At each step \( (v_n, z_n) \) brackets 5, and more closely than the previous step. Note that the bottom row above approximates \( \log_{10} 5 = 0.6989700 \) by \( 11/16 = 0.6875 \). Exemplifying his prodigious powers as a calculator, Euler carries this forward for 22 steps, producing the approximation \( \log_{10} 5 = 0.6989700 \), which is correct to 7 places.

This is essentially an exercise in square root taking, where \( r_2 = 4.216965 = \sqrt{\sqrt{10} \cdot \sqrt{10 \sqrt{10}}} \), for example. Euler says that Briggs and Vlacq calculated their log tables using this algorithm, but that methods had improved by his day (keep in mind that Euler was writing 125 years after Briggs and Vlacq). This isn't as daunting as it might seem, considering that the Newton-Raphson method of calculating square roots was well known by the time of Briggs: it was stated explicitly by Hero of Alexandria around the time of Christ and was quite possibly known to the ancient Babylonians. Note also that it converges rapidly: with first guess 3, the method gives \( \sqrt{10} \) to eight places in three iterations.
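The §106 procedure needs nothing but square roots and averages, so it is easy to sketch. In the Python below (a sketch; both function names are ours), `heron_sqrt` applies Hero's rule, replacing a guess \( x \) by the average of \( x \) and \( n/x \), and `log10_by_geometric_means` keeps two numbers bracketing the target together with their known logarithms, replacing one of them at each step by their geometric mean, whose logarithm is the average of theirs. For brevity the geometric means use `math.sqrt` rather than Hero's rule; the first four rows should reproduce the table above.

```python
import math
from fractions import Fraction

def heron_sqrt(n, guess, iterations):
    """Hero's rule (Newton-Raphson for x^2 = n): x -> (x + n/x) / 2.
    Returns every iterate, starting with the guess, as exact fractions."""
    x = Fraction(guess)
    iterates = [x]
    for _ in range(iterations):
        x = (x + n / x) / 2
        iterates.append(x)
    return iterates

def log10_by_geometric_means(target, steps):
    """Rows (v_n, z_n, r_n, log r_n) of the bracketing process, assuming 1 < target < 10."""
    v, z = 1.0, 10.0          # numbers bracketing the target
    log_v, log_z = 0.0, 1.0   # their logarithms, known exactly
    rows = []
    for _ in range(steps):
        r = math.sqrt(v * z)          # geometric mean of the bracket
        log_r = (log_v + log_z) / 2   # its logarithm: the average of the two logs
        rows.append((v, z, r, log_r))
        if r < target:                # keep whichever half still brackets the target
            v, log_v = r, log_r
        else:
            z, log_z = r, log_r
    return rows

# Three iterations of Hero's rule from 3 already give sqrt(10) closely.
print([float(x) for x in heron_sqrt(10, 3, 3)], math.sqrt(10))

rows = log10_by_geometric_means(5, 30)
for row in rows[:4]:
    print(row)                        # compare with the four rows of the table
print(rows[-1][3], math.log10(5))     # a binary fraction close to 0.6989700
```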

In §110 and §111 Euler works out some problems of exponential growth identical in type to ones I've assigned in algebra classes. He addresses human population growth, showing that an annual increase of \( 1/144 \) leads to a doubling every century, explaining charmingly that "it is quite ridiculous for the incredulous to object that in such a short space of time the whole earth could not be populated beginning with a single man." (Adam!) He does an amortization calculation for a loan "at the usurious rate of five percent annual interest", calculating that a paydown of 25,000 florins per year on a 400,000 florin loan leads to a 33 year term, rather amazingly tracking American practice in the late twentieth century with our thirty year home mortgages.
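Both examples are easy to reproduce. The Python sketch below assumes interest is compounded once a year and each 25,000-florin payment is made at the end of the year; those conventions and the helper name are our assumptions, not details taken from the Introductio. Under them the balance after \( n \) years is \( 500000 - 100000 \cdot 1.05^n \), which reaches zero when \( 1.05^n = 5 \).

```python
import math

# Population: an annual increase of 1/144, compounded for 100 years
century_factor = (1 + 1 / 144) ** 100
print(century_factor)   # about 1.998: the population roughly doubles

def years_to_repay(principal, rate, payment):
    """Years of fixed end-of-year payments needed to clear a loan at compound interest.
    Also returns the final balance (negative means the last payment was more than needed)."""
    balance, years = principal, 0
    while balance > 0:
        balance = balance * (1 + rate) - payment   # add a year's interest, then pay
        years += 1
    return years, balance

years, final_balance = years_to_repay(400_000, 0.05, 25_000)
print(years, round(final_balance))

# Closed form: 1.05**n = 5, so n = log 5 / log 1.05
print(math.log(5) / math.log(1.05))   # about 32.99
```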

Chapter VII is titled "Exponentials and Logarithms Expressed through Series" and starts by saying that for any base \( a \), there is a constant \( k \) depending on \( a \) such that \( a^\omega = 1 + k \omega \) for small \( \omega \). True in the limit only, of course, but it makes sense because he is approximating \( a^\omega \) by its tangent line at \( \omega = 0 \). He works out an example with \( a = 10 \), taking \( \omega = 10^{-6} \), and shows that \( k_{10} = 2.30258 \), not so coincidentally equal to \( \ln 10 \). After a bit this appears, §122:

Introductio in Analysin Infinitorum, §122

Since we are free to choose the base \( a \) for the system of logarithms, we now choose \( a \) in such a way that \( k = 1 \), then the series above found in §116, \( \large{1 + {1 \over 1} + {1 \over {1\cdot 2}} + {1 \over {1\cdot 2 \cdot 3}} + {1 \over {1\cdot 2 \cdot 3 \cdot 4}} + \cdots} \) is equal to \( a \). If the terms are represented as decimal fractions and summed, we obtain the value for \( a = 2.71828 1828 4590 4523 536028 \cdots \). When this base is chosen, the logarithms are called natural or hyperbolic. The latter name is used since the quadrature of a hyperbola can be expressed through these logarithms. For the sake of brevity for this number \( 2.718281828459 \cdots \) we will use the symbol \( e \), which will denote the base for the natural or hyperbolic logarithms, which corresponds to the value \( k = 1 \), and \( e \) represents the sum of the infinite series \( \large{1 + {1 \over 1} + {1 \over {1\cdot 2}} + {1 \over {1\cdot 2 \cdot 3}} + {1 \over {1\cdot 2 \cdot 3 \cdot 4}} + \cdots} \).

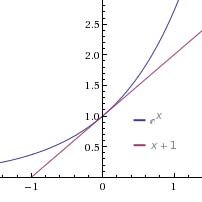

That's \( e \) to 23 places, all correct! Boyer says, "The concept behind this number had been well known ever since the invention of logarithms more than a century before; yet no standard notation for it had become common." (1968, p 484) Earlier in the chapter, Euler works out the derivation for any value of \( k \). Let's reprise his work for \( k = 1 \), when \( a^\omega \) is approximated by its tangent line \( y = 1 + \omega \) in the vicinity of \( 0 \). \( e \) is defined as the base for which this is true, namely:

\[ e^\omega = {1 + \omega}. \]

Let \( j \) be some large value, not necessarily an integer, and take the foregoing to the \( j^{th} \) power, the expansion following from the generalized binomial theorem:

\[ \begin{equation}{{e^{j \omega}} = {{(1 + \omega)}^j} = {1 + {{j \over 1} \omega} + {{{j(j - 1)} \over {1 \cdot 2}} \omega^2} + {{{j(j - 1)(j - 2)} \over {1 \cdot 2 \cdot 3}} \omega^3} + \cdots}.} \tag{1} \end{equation} \]

Let \(j = z/\omega \), so \( \omega = z/j \), and substitute in:

\begin{equation}{{e^z} = {{(1 + {z \over j})}^j} = {1 + {z \over 1} + {{{j(j - 1)} \over {1 \cdot 2}} {z^2 \over j^2}} + {{{j(j - 1)(j - 2)} \over {1 \cdot 2 \cdot 3}} {z^3 \over j^3}} + \cdots}} \tag{2} \end{equation}

But for large \( j \):

\[ \begin{equation}{{{j(j - 1)} \over j^2} \rightarrow 1, \hspace{6pt} {{j(j - 1)(j - 2)} \over j^3} \rightarrow 1, \text{ and so on.}} \tag{3} \end{equation} \]

Equating those limiting values and plugging into (2):

\[ \begin{equation}{e^z = {1 + {z \over 1} + {z^2 \over 2!} + {z^3 \over 3!} + \cdots}} \tag{4} \end{equation} \]
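Several numerical facts in this chapter can be checked in a few lines: the constant \( k \) for base 10 from a tiny \( \omega \), the approach of \( (1 + z/j)^j \) and of the series (4) to \( e \) at \( z = 1 \), and Euler's 23 decimal places of \( e \). The Python below is our sketch (helper names hypothetical); it sums the series with exact fractions so the decimal digits are not spoiled by rounding.

```python
import math
from fractions import Fraction

def k_for_base(a, omega=1e-6):
    """The constant k with a**omega ≈ 1 + k*omega for small omega."""
    return (a ** omega - 1) / omega

def compound(z, j):
    """(1 + z/j)**j for a large j."""
    return (1 + z / j) ** j

def exp_series(z, terms):
    """Partial sum 1 + z/1! + z^2/2! + ... of equation (4)."""
    total, term = 0.0, 1.0
    for n in range(terms):
        total += term
        term *= z / (n + 1)
    return total

print(k_for_base(10), math.log(10))              # both about 2.30258
print(compound(1, 10**6), exp_series(1, 20), math.e)

# e to 23 decimal places from 30 terms of the series, summed exactly
e_exact = sum(Fraction(1, math.factorial(n)) for n in range(30))
digits = e_exact.numerator * 10**23 // e_exact.denominator
print(digits)   # 271828182845904523536028
```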

Put \( z = 1 \) to get the value of \( e \), an early if not the first methodical explanation of this constant. Let's follow his development of a log series, again assuming \( k = 1 \), so we are dealing with natural logs. The first equality in (1) implies that \( j \omega = \log{(1 + \omega)}^j \); now let \( {(1 + \omega)}^j = 1 + x \). These two imply that:

\[ {1 + \omega} = {{(1 + x)}^{1/j}}, \text{ so } \omega = {{(1 + x)}^{1/j} - 1}. \]

\[ \begin{align*} \therefore {\log{(1 + x)}} &= {j \omega} = {j\left({(1 + x)}^{1/j} - 1\right)} \\ &= j{(1 + x)}^{1/j} - j \\ &= \left(j + {x \over 1} - {{x^2} \over 2} + {{x^3} \over 3} - {{x^4} \over 4} + \cdots \right) - j \\ &= {x \over 1} - {{x^2} \over 2} + {{x^3} \over 3} - {{x^4} \over 4} + \cdots \end{align*} \]

The expansion again follows from the binomial theorem and depends on cancelling quotients involving \( j \) like in (3), valid in the limit for large \( j \). Using \( -x \) for \( x \) in this formula leads to:

\[ \log{(1 - x)} = - {x \over 1} - {x^2 \over 2} - {x^3 \over 3} - {x^4 \over 4} - \cdots. \]

Subtract these two expressions:

\[ \begin{equation}{\log{\left({{1 + x} \over {1 - x}}\right)} = {{2x \over 1} + {{2x^3} \over 3} + {{2x^5} \over 5} + \cdots},} \tag{5} \end{equation} \]

which is "vehemently convergent" for small \( x \): "vehementer convergunt, si pro \( x \) statuatur fractio valde parva" (they converge vehemently if a very small fraction is put for \( x \)). You've got to love the expressive language. He proceeds to calculate natural logs for the integers between 1 and 10. Putting \( x = 1/5 \) in (5), for example, results in:

\[ {\log{6 \over 4}} = {\log{3 \over 2}} = {2 \over {1 \cdot 5}} + {2 \over {3 \cdot 5^3}} + {2 \over {5 \cdot 5^5}} + {2 \over {7 \cdot 5^7}} + \cdots = 0.405465, \]

which is correct to six places, taking the first four terms as shown. In a similar vein, putting \( x = 1/7 \) leads to \( \log{4/3} \). But then:

\[ \log{3 \over 2} + \log{4 \over 3} = \log 2. \]

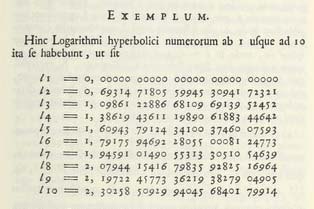

The natural logs of other small integers are calculated similarly, the only sticky one between 1 and 10 being 7. No problem! Put \( x = 1/99 \) in (5) to get \( \log{(100/98)} = \log{(50/49)} \), but \( \log 50 = {2 \log 5} + \log 2 \), so we have \( \log 49 \) and \( \log 7 = {(1/2)} \log 49 \). Voilà. "Hinc Logarithmi hyperbolici numerorum ab 1 usque ad 10 ita se habebunt", he writes (hence we have the hyperbolic logarithms of numbers from 1 to 10), and proceeds to give them to 25 places, as shown here.

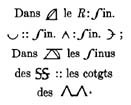
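The bootstrapping from equation (5) is easy to replay. The Python sketch below (helper name ours) sums (5) for \( x = 1/5, 1/7, 1/9, 1/99 \), giving \( \log{(3/2)} \), \( \log{(4/3)} \), \( \log{(5/4)} \), and \( \log{(50/49)} \), and assembles the logarithms of 1 through 10 from them as described above. Using \( x = 1/9 \) for \( \log 5 \) is our assumption of a natural route; the text only says the other integers are handled "similarly".

```python
import math

def log_ratio(x, terms):
    """Equation (5), truncated: log((1 + x)/(1 - x)) = 2(x + x^3/3 + x^5/5 + ...)."""
    return sum(2 * x ** (2 * m + 1) / (2 * m + 1) for m in range(terms))

# Four terms with x = 1/5, as in the text: log(3/2) ≈ 0.405465
print(round(log_ratio(1 / 5, 4), 6))

T = 15                                # ample for these small x
log_3_2 = log_ratio(1 / 5, T)         # (1 + 1/5)/(1 - 1/5)   = 3/2
log_4_3 = log_ratio(1 / 7, T)         # (1 + 1/7)/(1 - 1/7)   = 4/3
log_5_4 = log_ratio(1 / 9, T)         # (1 + 1/9)/(1 - 1/9)   = 5/4
log_50_49 = log_ratio(1 / 99, T)      # (1 + 1/99)/(1 - 1/99) = 50/49

log2 = log_3_2 + log_4_3
log3 = log_3_2 + log2
log5 = log_5_4 + 2 * log2
log7 = (2 * log5 + log2 - log_50_49) / 2   # log 49 = log 50 - log(50/49)

table = {1: 0.0, 2: log2, 3: log3, 4: 2 * log2, 5: log5, 6: log2 + log3,
         7: log7, 8: 3 * log2, 9: 2 * log3, 10: log2 + log5}
for n, value in table.items():
    print(n, value, math.log(n))
```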

Trigonometry is an old subject (Ptolemy's chord table!) revived in Europe with the rest during the great awakening. Notation varied throughout the 17th and well into the 18th century. Cajori accounts for the bewildering variety of usages and it must have been confusing for all concerned (it is for me — see Volume II, §511 — §537). Euler himself experimented early in his career with \( \int \) for \( \sin \), probably just an elongated S favored by typesetters at that time. The image here is a sentence in Jean Bernoulli's suggested notation for spherical trigonometry (Cajori, §524) as late as 1776. Granted that spherical trig is a more complicated branch of the subject, it still illustrates the danger of entrusting notational decisions to one less brilliant than Euler. \( \sin \) and \( \cos \) had been used before Euler, mixed in with scores of other notations, but the relentless, systematic, and highly effective use in the Introductio seems to have made them canonical once and for all.

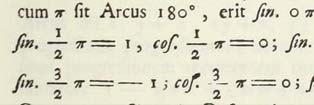

Here is a screen shot from the 1748 edition of the Introductio. The S's in \( sin \) and \( cos \) are elongated, but that was true for all S's throughout the work — note the sit ("is") on the first line with text right after the \( \pi \); it looks like fit, but it's not. Of course notation is always important, but the complex trigonometric formulas Euler needed in the Introductio would quickly become unintelligible without sensible contracted notation.

Euler uses arcs (radians) rather than angles as a matter of course. Chapter VIII on trigonometry is titled "On Transcendental Quantities which Arise from the Circle" and at its start he says let's assume the radius is 1 — second nature today, but not necessarily when he wrote and the gateway to the modern concept of sines and cosines as ratios rather than line segments. He approximates "half of the circumference of this circle" by a 127 digit value \( 3.141592 \cdots \) and says "for the sake of brevity we will use the symbol \( \pi \) for this number". My check shows that all digits are correct except the 113th, which should be 8 rather than the 7 he gives. This was the best value at the time and must have come from Thomas Fantet de Lagny's calculation in 1719. Boyer [6] says that Euler was not the first to use \( \pi \) in this way, but that "it was Euler's adoption of the symbol \( \pi \) in 1737, and later in his many popular textbooks, that made it widely known and used". (1968, p 484)

Euler notes in §132 that \( {\sin^2 z + \cos^2 z} = 1 \) and says let's factor it like this:

\[ {\sin^2 z + \cos^2 z} = {{(\cos z + i \sin z)}{(\cos z - i \sin z)}} = 1. \]

Imaginary but useful, he says — let's try this:

\[ {{(\cos y + i \sin y)}{(\cos z + i \sin z)}} = {\cos{(y + z)} + i \sin{(y + z)}}, \]

where the four terms resulting from multiplying out the left side are boiled down using the addition formulas for \( \sin \) and \( \cos \). Similarly:

\[ {{(\cos x + i \sin x)} {(\cos y + i \sin y)} {(\cos z + i \sin z)}} = {\cos{(x + y + z)} + i \sin{(x + y + z)}}, \]

and in general:

\[ \begin{equation}{{(\cos z + i \sin z)}^n = {\cos{nz} + i \sin{nz}}.} \tag{6} \end{equation} \]

At this point, you can almost hear the "Eureka!" reverberating down through the ages, seeing the power on the left and sums on the right like with exponentials. A tip of the hat to the old master, who does not cover his tracks, but takes you along the path he traveled. To my mind, that path is the one to understanding, truer and deeper than some latter day denatured and "elegant" generalized development with all motivation pressed right out of it. Euler pauses at this point to beat (6) into power series expansions for \( \sin \) and \( \cos \). There is another expression similar to (6), but with minus instead of plus signs, leading to:

\[ \begin{equation}{{\cos nz} = {{{(\cos z + i \sin z)}^n + {(\cos z - i \sin z)}^n} \over 2}.} \tag{7} \end{equation} \]

\[ \begin{equation}{{\sin nz} = {{{(\cos z + i \sin z)}^n - {(\cos z - i \sin z)}^n} \over 2i}.} \tag{7′} \end{equation} \]

Applying the binomial theorem to each of those expressions in (7) results in the following, since all the odd power terms cancel:

\[ \cos nz = \cos^n z - {{n(n - 1)} \over {1 \cdot 2}} \cos^{n-2} z \cdot \sin^2 z + {{n(n - 1)(n - 2)(n - 3)} \over {1 \cdot 2 \cdot 3 \cdot 4}} \cos^{n-4} z \cdot \sin^4 z + \cdots. \]
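Both (6) and the binomial expansion of \( \cos nz \) can be spot-checked numerically. The Python sketch below (function names ours) compares each side for a few arcs and integer \( n \); the even-index terms of the binomial sum carry the sign \( i^{2m} = (-1)^m \). `math.comb` needs Python 3.8 or later.

```python
import math

def de_moivre_gap(z, n):
    """|(cos z + i sin z)^n - (cos nz + i sin nz)|, which (6) says is zero."""
    lhs = complex(math.cos(z), math.sin(z)) ** n
    rhs = complex(math.cos(n * z), math.sin(n * z))
    return abs(lhs - rhs)

def cos_nz_binomial(z, n):
    """cos nz from the expansion: sum over m of (-1)^m C(n, 2m) cos^(n-2m) z sin^(2m) z."""
    c, s = math.cos(z), math.sin(z)
    return sum((-1) ** m * math.comb(n, 2 * m) * c ** (n - 2 * m) * s ** (2 * m)
               for m in range(n // 2 + 1))

for z in (0.1, 0.7, 2.0):
    for n in (2, 3, 5, 8):
        print(z, n, de_moivre_gap(z, n), cos_nz_binomial(z, n) - math.cos(n * z))
```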

He continues:

Let the arc \( z \) be infinitely small, then \( \sin z = z \) and \( \cos z = 1 \). If \( n \) is an infinitely large number, so that \( nz \) is a finite number, say \( nz = v \), then, since \( \sin z = z = {v / n} \), we have: \[ \begin{equation}{{\cos v} = {1 - {v^2 \over {1 \cdot 2}} + {v^4 \over {1 \cdot 2 \cdot 3 \cdot 4}} - {v^6 \over {1 \cdot 2 \cdot 3 \cdot 4 \cdot 5 \cdot 6}} + \cdots}.} \tag{8} \end{equation} \]

The final step depends on \( n-2 \sim n-1 \sim n \) and so on for large \( n \), so that, for example, \( n(n-1) \sin^2 z \sim n^2 z^2 = v^2 \), while \( \cos^{n-2} z \sim 1 \). He derives a similar expansion for \( \sin \):

\[ \begin{equation}{{\sin v} = {v - {v^3 \over {1 \cdot 2 \cdot 3}} + {v^5 \over {1 \cdot 2 \cdot 3 \cdot 4 \cdot 5}} - {v^7 \over {1 \cdot 2 \cdot 3 \cdot 4 \cdot 5 \cdot 6 \cdot 7}} + \cdots}.} \tag{9} \end{equation} \]
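A few partial sums of (8) and (9) show how quickly the series settle down for the small arcs Euler has in mind. The Python sketch below (function names ours) evaluates them at \( v = \pi/6 \), that is 30°, and compares with the library values.

```python
import math

def cos_series(v, terms):
    """Partial sum of (8): 1 - v^2/2! + v^4/4! - ..."""
    return sum((-1) ** m * v ** (2 * m) / math.factorial(2 * m) for m in range(terms))

def sin_series(v, terms):
    """Partial sum of (9): v - v^3/3! + v^5/5! - ..."""
    return sum((-1) ** m * v ** (2 * m + 1) / math.factorial(2 * m + 1) for m in range(terms))

v = math.pi / 6   # 30 degrees
for terms in (2, 3, 4, 6, 8):
    print(terms, cos_series(v, terms), sin_series(v, terms))
print(math.cos(v), math.sin(v))
```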

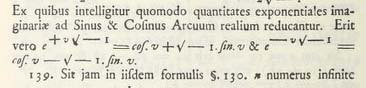

He shows how to calculate sines, cosines, and tangents, remarking that it suffices to calculate sines and cosines of angles less than 30° (he writes 30°, even though all calculations proceed with fractions of \( \pi \)). Now he's in a position to prove the theorem that will be known as Euler's formula until the end of time. Says he, "Ex quibus intelligitur quomodo quantitates exponentiales imaginariae ad Sinus & Cosinus Arcuum realium reducantur. Erit vero": from this we understand how imaginary exponential quantities can be reduced to sines and cosines of real arcs, for we have:

\[ \begin{equation}{ e^{iv} = {\cos{v} + i \sin{v}} \hspace{15pt} \& \hspace{15pt} e^{-iv} = {\cos{v} - i \sin{v}} .} \tag{10} \end{equation} \]

One approach would be to put \( iv \) for \( z \) in the exponential series (4) and compare the result with the series (8) and (9) for \( \cos \) and \( \sin \); the proof falls in your lap. But Euler returns to his earlier formulas (7) and (7′) for \( \cos nz \) and \( \sin nz \), letting \( z \) be small and \( j = n \) large so that their product \( v = nz = jz \) is finite (he actually says "infinitely small" and "infinitely large"). Therefore \( \sin z = \sin{(v/j)} = v/j \) and \( \cos z = 1 \). The last two are true only in the limit, of course, but let's think like Euler. Substituting into (7) and (7′):

\[ {\cos v} = {{{(1 + iv/j)}^j + {(1 - iv/j)}^j} \over 2}, \hspace{20pt} {\sin v} = {{{(1 + iv/j)}^j - {(1 - iv/j)}^j} \over 2i}. \]

We are talking about limits here (and were when manipulating power series expansions as well), so those four expressions in the numerators can be replaced by exponentials, as developed earlier:

\[ \begin{equation}{{\cos v} = {{e^{iv} + e^{-iv}} \over 2}, \hspace{20pt} {\sin v} = {{e^{iv} - e^{-iv}} \over 2i}.} \tag{11} \end{equation} \]

Euler's formula (10) follows immediately by adding the first equation in (11) to \( i \) times the second one.
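The limiting argument can be watched numerically by keeping \( j \) finite in the two expressions substituted into (7) and (7′). The Python sketch below (function name ours) shows both approaching \( \cos v \) and \( \sin v \) as \( j \) grows, then compares \( e^{iv} \) with \( \cos v + i \sin v \) directly.

```python
import cmath
import math

def euler_limit(v, j):
    """((1 + iv/j)^j + (1 - iv/j)^j)/2 and ((1 + iv/j)^j - (1 - iv/j)^j)/(2i) for finite j."""
    plus = (1 + 1j * v / j) ** j
    minus = (1 - 1j * v / j) ** j
    return (plus + minus) / 2, (plus - minus) / 2j

v = 1.0
for j in (10, 1_000, 100_000):
    c, s = euler_limit(v, j)
    print(j, c.real, s.real)        # imaginary parts vanish up to rounding
print(math.cos(v), math.sin(v))

# Euler's formula (10): e^{iv} = cos v + i sin v
print(cmath.exp(1j * v), complex(math.cos(v), math.sin(v)))
```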

André Weil writes[7]:

One of Euler's most sensational early discoveries, perhaps the one which established his growing reputation most firmly, was his summation of the series \( \sum_{n=1}^\infty n^{-2} \) and more generally of \( \sum_{n=1}^\infty n^{-2 \nu} \), i.e. in modern notation \( \zeta(2 \nu) \), for all positive even integers \( 2 \nu \). This was a famous problem, first formulated by P. Mengoli in 1650; it had resisted the efforts of all earlier analysts, including Leibniz and the Bernoullis. \( \cdots \)

What Euler found in 1735 is that \( \zeta(2) = \pi^2/6 \), and more generally, for \( \nu \geq 1\), \( {\zeta(2 \nu)} = {r_{\nu} \pi^{2 \nu}} \), where the \( r_{\nu} \) are rational numbers which eventually turned out to be closely related to the Bernoulli numbers. At first Euler obtained the value of \( \zeta(2) \), and at least the next few values of \( \zeta(2 \nu) \), by a somewhat reckless application of Newton's algebraic results, on the sums of powers of the roots for an equation of finite degree, to transcendental equations of the type \( {1 - \sin{(x/a)}} = 0 \). With this procedure he was treading on thin ice, and of course he knew it (p 184).

Euler was 28 when he first proved this result. Weil notes that his good instincts were fortified by prodigious calculations of these sums for small \( \nu \), calculations he seemed to enjoy for their own sake (p 261). Also that "for the next ten years, Euler never relaxed his efforts to put his conclusions on a sound basis" (p 265). By 1744, when the Introductio went into manuscript, he was able to include "a full account of the matter, entirely satisfactory by his standards, and even, in substance, by our more demanding ones" (Weil, p 185). Euler proceeds as follows in §156 and §167. From the earlier exponential work:

\[ \begin{equation}{{e^x - e^{-x}} = {{\left(1 + {x \over j}\right)}^j - {\left(1 - {x \over j}\right)}^j} = {2 \left( {x \over 1} + {x^3 \over 3!} + {x^5 \over 5!} + \cdots \right)}.} \tag{12} \end{equation} \]

He had spent some time earlier solving for all the roots of \( z^n - a^n \), essentially all the complex roots of 1 around the unit circle. He talks of the quadratic factors of \( z^n - a^n \), each the product of a pair of linear factors and of the form \( a^2 - 2az \cos{(2k\pi/n)} + z^2 \), where \( k = 0, 1, 2, \ldots \); they are finite in number because they start to repeat after a while. Putting \( n = j \), \( a = 1 + x/j \), and \( z = 1 - x/j \), it follows that the factors of (12) are:

\[ {\left(1 + {x \over j}\right)}^2 - 2 {\left(1 + {x \over j}\right)} {\left(1 - {x \over j}\right)} \cos{\left({2k\pi \over j}\right)} + {\left(1 - {x \over j}\right)}^2, \hspace{10pt} k=0,1,2 \cdots. \]
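The factorization can be checked numerically for an ordinary even \( n \). In this sketch (helper names hypothetical) the linear factors \( a - z \) and \( a + z \) are kept separate, and the quadratic factors for \( k = 1, \ldots, n/2 - 1 \) supply the remaining complex roots in conjugate pairs; for \( k = 0 \) the quadratic collapses to \( (a - z)^2 \), so it is left out.

```python
import math

def quadratic_factor(a: float, z: float, k: int, n: int) -> float:
    """a^2 - 2az cos(2k*pi/n) + z^2: one real quadratic factor of a^n - z^n."""
    return a * a - 2 * a * z * math.cos(2 * k * math.pi / n) + z * z

def factored_form(a: float, z: float, n: int) -> float:
    """(a - z)(a + z) times the quadratic factors for k = 1 .. n/2 - 1 (n even)."""
    if n % 2:
        raise ValueError("this sketch assumes n is even")
    product = (a - z) * (a + z)
    for k in range(1, n // 2):
        product *= quadratic_factor(a, z, k, n)
    return product

# Euler's substitution, with a modest finite j standing in for the infinite one.
j, x = 200, 1.5
a, z = 1 + x / j, 1 - x / j
print(factored_form(a, z, j), a ** j - z ** j)
```

With \( a = 1 + x/j \) and \( z = 1 - x/j \), the linear factor \( a - z = 2x/j \) is the one that eventually yields the leading \( x \).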

Expanding the products and collecting terms, each factor becomes:

\[ 2 + 2 {x^2 \over j^2} - 2{\left(1 - {x^2 \over j^2}\right)}{\cos\left({{2k\pi} \over j}\right)}. \]

Using the approximation \( \cos{v} \approx 1 - v^2/2 \) from (8), which applies because \( v = 2k\pi/j \) is infinitely small for each finite \( k \), the factors become:

\[ 2 + 2 {x^2 \over j^2} - 2\left(1 - {x^2 \over j^2}\right) \left(1 - {{2 k^2 \pi^2} \over j^2}\right). \]

Multiplying out and collecting like terms gives:

\[ {{4 x^2} \over j^2} + {{4 k^2 \pi^2} \over j^2} - {{4 k^2 \pi^2 x^2} \over j^4}, \]

which becomes, after multiplying through by the constant \( j^2/(4k^2\pi^2) \):

\[ {x^2 \over {k^2 \pi^2}} + 1 - {x^2 \over j^2}. \]
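The chain of algebra from the expanded factor to the rescaled one can be spot-checked with exact rational arithmetic. Every expression involved is a polynomial in \( x \), \( 1/j \), the cosine value, and \( \pi \), so a rational stand-in for \( \pi \) (the hypothetical parameter `p`) and an arbitrary rational in place of the cosine (`c`) are enough. This checks the algebra only, not the approximation \( \cos{v} \approx 1 - v^2/2 \) itself.

```python
from fractions import Fraction as F

def check_steps(x: F, j: F, k: int, p: F, c: F) -> None:
    """Assert each rewrite of one factor; p stands in for pi, c for a cosine value."""
    a, z = 1 + x / j, 1 - x / j
    # The quadratic factor equals its expanded form for any cosine value c.
    assert a * a - 2 * a * z * c + z * z == 2 + 2 * x**2 / j**2 - 2 * (1 - x**2 / j**2) * c
    # Replace cos(2k*pi/j) by 1 - 2k^2 pi^2 / j^2, then multiply out and collect terms.
    approx = 2 + 2 * x**2 / j**2 - 2 * (1 - x**2 / j**2) * (1 - 2 * k**2 * p**2 / j**2)
    collected = 4 * x**2 / j**2 + 4 * k**2 * p**2 / j**2 - 4 * k**2 * p**2 * x**2 / j**4
    assert approx == collected
    # Multiply through by j^2 / (4 k^2 pi^2).
    scaled = collected * j**2 / (4 * k**2 * p**2)
    assert scaled == x**2 / (k**2 * p**2) + 1 - x**2 / j**2

check_steps(x=F(3, 2), j=F(1000), k=3, p=F(355, 113), c=F(7, 9))
print("all steps agree")
```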

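The \( x^2 / j^2 \) term can be ignored, since "even when multiplied by \( j \), it remains infinitely small." The case \( k = 0 \) is degenerate, collapsing to \( (a - z)^2 \); the linear factor \( a - z = 2x/j \) contributes the leading \( x \), and the constant multipliers are settled by matching the coefficient of \( x \) on both sides. So putting \( k = 1, 2, 3, \ldots \) and writing out the factors one after another leads to: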

\[ \begin{align*}

{{e^x - e^{-x}} \over 2} &= {x \left( 1 + {x^2 \over 3!} + {x^4 \over 5!} + {x^6 \over 7!} + \cdots \right)} \\

&= x {\left(1 + {x^2 \over \pi^2}\right)}{\left(1 + {x^2 \over {4 \pi^2}}\right)}{\left(1 + {x^2 \over {9 \pi^2}}\right)} \cdots.

\end{align*} \]
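Here \( (e^x - e^{-x})/2 \) is what is now written \( \sinh x \). Truncating the infinite product after \( N \) factors gives a rough numerical check; the cutoffs below are arbitrary choices, and the relative error shrinks roughly like \( x^2/(\pi^2 N) \).

```python
import math

def sinh_product(x: float, terms: int) -> float:
    """x * prod_{k=1}^{terms} (1 + x^2 / (k^2 pi^2)), a truncation of the product."""
    result = x
    for k in range(1, terms + 1):
        result *= 1 + x * x / (k * k * math.pi ** 2)
    return result

x = 1.5
for terms in (10, 100, 10_000):
    # Every omitted factor exceeds 1, so the truncated product always falls short.
    print(terms, sinh_product(x, terms), math.sinh(x))
```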

Cancelling the common factor \( x \) and putting \( x^2 = \pi^2 z \) into this product formula results in:

\[ \begin{equation}{{1 + {\pi^2 \over 3!}z + {\pi^4 \over 5!}z^2 + \cdots} = {{\left(1 + z\right)}{\left(1 + {z \over 4}\right)}{\left(1 + {z \over 9}\right)} \cdots}} \tag{13} \end{equation} \]

The coefficient of \( z \) on the left side of (13) is \( \pi^2/3! = \pi^2/6 \), but the coefficient of \( z \) on the right side is \( \zeta(2) = {1 + 1/4 + 1/9 + \cdots} \)! To see this, think how you'd get \( z \) to the first power by multiplying out on the right — every factor has to contribute a 1 except one of them. So you'd take the coefficient of \( z \) in factor \( (1 + z) \), namely 1, and 1's from all the other factors. To that you add the coefficient of \( z \) in factor \( (1 + z/4) \), namely \( 1/4 \), and 1's from all the other factors. Continuing in this vein gives the result:

\[ \zeta(2) = {\sum_{n=1}^\infty {1 \over n^2}} = {1 + {1 \over 4} + {1 \over 9} + {1 \over 16} + {1 \over 25} + \cdots} = {\pi^2 \over 6}. \]
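The coefficient-matching argument can be mimicked with finitely many factors: multiply out the first \( N \) factors on the right of (13) exactly and read off the coefficient of \( z \). The cutoff \( N = 200 \) and the function name are arbitrary choices for this sketch; the point is only that the coefficient is exactly \( 1 + 1/4 + \cdots + 1/N^2 \) and creeps up on \( \pi^2/6 \).

```python
from fractions import Fraction
import math

def expand_product(n_factors: int, degree: int) -> list[Fraction]:
    """Coefficients of z^0..z^degree in (1 + z)(1 + z/4)...(1 + z/n_factors^2)."""
    coeffs = [Fraction(1)] + [Fraction(0)] * degree
    for k in range(1, n_factors + 1):
        alpha = Fraction(1, k * k)
        # Multiply the running polynomial by (1 + alpha z), highest power first
        # so that coeffs[d - 1] still holds its old value when it is used.
        for d in range(degree, 0, -1):
            coeffs[d] += alpha * coeffs[d - 1]
    return coeffs

coeffs = expand_product(200, 2)
print(float(coeffs[1]), math.pi ** 2 / 6)
```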

Truly amazing, and if this isn't art, I've never seen it. But Euler isn't done yet. Let \( A = \pi^2/6, B = \pi^4/120, \ldots \) be the coefficients of the series on the left of (13) and \( \alpha = 1, \beta = 1/4, \gamma = 1/9, \ldots \) the coefficients of \( z \) in the factors on the right. Put:

\[ \begin{align*}

P &= {\alpha + \beta + \gamma + \cdots} \\

Q &= {\alpha^2 + \beta^2 + \gamma^2 + \cdots} \\

R &= {\alpha^3 + \beta^3 + \gamma^3 + \cdots},

\end{align*} \]

and so on. Then \( P = A \), as just argued. But also \( Q = AP - 2B \). The master says, "The truth of these formulas is intuitively clear, but a rigorous proof will be given in the differential calculus." To show that the formula makes sense, consider that \( B \), the coefficient of \( z^2 \) in (13), can be matched with the coefficients on the right of (13) much as was done for the coefficient of \( z \), except that now pairs of coefficients from the right are needed:

\[ B = \alpha \beta + \alpha \gamma + \beta \gamma + \cdots, \]

where each product of two different coefficients appears exactly once. So:

\[ AP = {(\alpha + \beta + \gamma + \cdots)}{(\alpha + \beta + \gamma + \cdots)} = {(\alpha^2 + \beta^2 + \gamma^2 + \cdots)} + 2B. \]

The idea is that when the product is expanded, every square appears once and every cross-product appears twice (\( \alpha \beta \text{ and } \beta \alpha \)). So, as asserted above:

\[ \begin{equation}{Q = AP - 2B.} \tag{14} \end{equation} \]
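For a finite list of \( \alpha \)'s, identity (14) is ordinary algebra and can be checked exactly. In this sketch (function name hypothetical) \( B \) is built literally as the sum over distinct pairs, as in the formula for \( B \) above, and \( A \) coincides with \( P \) just as in the text.

```python
from fractions import Fraction
from itertools import combinations

def check_identity_14(alphas: list[Fraction]) -> bool:
    """Check Q = A*P - 2*B for the finite product of (1 + alpha z) over alphas."""
    A = sum(alphas)                                      # coefficient of z
    B = sum(a * b for a, b in combinations(alphas, 2))   # coefficient of z^2: distinct pairs
    P = sum(alphas)                                      # sum of the alphas
    Q = sum(a * a for a in alphas)                       # sum of their squares
    return Q == A * P - 2 * B

alphas = [Fraction(1, k * k) for k in range(1, 30)]
print(check_identity_14(alphas))
```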

But \( Q = \zeta(4) = {\sum_{n=1}^\infty {1/n^4}} \) and the values on the right of (14) are known, so:

\[ Q = \zeta(4) = {\sum_{n=1}^\infty {1 \over n^4}} = \left({\pi^2 \over 6}\right)^2 - 2{\pi^4 \over 120} = {\pi^4 \left({1 \over 36} - {1 \over 60}\right)} = {\pi^4 \over 90}. \]
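The arithmetic in this last step can be confirmed with exact fractions, alongside a crude numerical cross-check; the cutoff of 10,000 terms is an arbitrary choice.

```python
from fractions import Fraction
import math

# Coefficients of pi^4 in (pi^2/6)^2 - 2 * pi^4/120, kept as exact rationals.
coefficient = Fraction(1, 6) ** 2 - 2 * Fraction(1, 120)
print(coefficient)  # 1/90

# Numerical cross-check against a partial sum of 1/n^4.
partial = sum(1 / n ** 4 for n in range(1, 10_001))
print(partial, math.pi ** 4 / 90)
```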

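There are similar formulas for \( R, S \), and so on, each in terms of the coefficients \( A, B, C, \ldots \) and the sums already found. The next one is \( R = AQ - BP + 3C \), where \( C = \pi^6/7! \) is the coefficient of \( z^3 \) in (13), and it gives \( \zeta(6) = \pi^6/945 \). Euler produces the values of \( \zeta(2 \nu) \) up to \( \nu = 13 \), that is, through \( \zeta(26) \). I'd forgotten that: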

\[ \zeta(24) = {\sum_{n=1}^\infty {1 \over n^{24}}} = {1 + {1 \over 2^{24}} + {1 \over 3^{24}} + {1 \over 4^{24}} + {1 \over 5^{24}} + \cdots} = {{2^{22} \over 25!}{1181820455 \over 273}\pi^{24}}. \]
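Euler's formulas for the later power sums are instances of what are now called Newton's identities. The sketch below assumes that framing: it treats the \( \pi \)-free parts \( 1/(2m+1)! \) of the left-hand coefficients of (13) as the elementary symmetric sums of \( \alpha = 1, \beta = 1/4, \ldots \), runs the recursion in exact rationals, and compares the \( \nu = 12 \) entry with the value quoted above. The function name is hypothetical.

```python
from fractions import Fraction
from math import factorial

def zeta_even_coefficients(max_nu: int) -> list[Fraction]:
    """Return r with zeta(2*nu) = r[nu] * pi**(2*nu) for nu = 1..max_nu (r[0] is a placeholder).

    e[m] = 1/(2m+1)! is the pi-free part of the z^m coefficient on the left of (13),
    i.e. the m-th elementary symmetric sum of alpha = 1, beta = 1/4, gamma = 1/9, ...
    Newton's identities then give the power sums P, Q, R, ... one after another.
    """
    e = [Fraction(0)] + [Fraction(1, factorial(2 * m + 1)) for m in range(1, max_nu + 1)]
    r = [Fraction(0)]
    for m in range(1, max_nu + 1):
        # p_m = e_1 p_{m-1} - e_2 p_{m-2} + ... + (-1)^(m-1) m e_m
        total = (-1) ** (m - 1) * m * e[m]
        for i in range(1, m):
            total += (-1) ** (i - 1) * e[i] * r[m - i]
        r.append(total)
    return r

r = zeta_even_coefficients(13)
print(r[1], r[2], r[3])  # 1/6 1/90 1/945

# The value quoted above for zeta(24), as a rational multiple of pi^24.
euler_24 = Fraction(2**22, factorial(25)) * Fraction(1181820455, 273)
print(r[12] == euler_24)
```

For \( m = 2 \) and \( m = 3 \) the loop reproduces \( Q = AP - 2B \) and \( R = AQ - BP + 3C \); every term carries the same power of \( \pi \), which is why the recursion can run on the rational parts alone.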

François Arago called Euler "Analysis Incarnate" and said that he could calculate without any apparent effort, "just as men breathe, as eagles sustain themselves in the air" (Boyer, 1968, p 482). His output, like his penetrating insight, is beyond understanding: over seventy volumes in the Opera Omnia, with more still coming. He established notations and laid foundations that endure to this day and are taught in high school and college virtually unchanged. The foregoing is only a sample from one of his works (an important one, granted); a fair summary of Volume I would run four times as long, including enticing sections on prime formulas, partitions, and continued fractions. Volume II of the Introductio was equally path-breaking in analytic geometry. No wonder his contemporaries and immediate successors were in awe of him.

Mike Bertrand

November 15, 2014

^ 1. "The Foremost Textbook of Modern Times", by C. B. Boyer (The American Mathematical Monthly, Vol 58, No 4, April 1951).

^ 2. Introductio in Analysin Infinitorum, by Leonhardo Eulero, Marcum-Michaelem Bosquet & Socios (Lausanne, 1748).

^ 3. Introduction to Analysis of the Infinite, Book I, by Leonard Euler, John D. Blanton, translator, Springer-Verlag (1988), ISBN 0-387-96824-5.

^ 4. A History of Mathematical Notations, Vol II, by Florian Cajori, The Open Court Company (1928). The summation sign was Euler's idea: §438.

^ 5. A Concise History of Mathematics (4th Revised Edition), by Dirk J. Struik, Dover (1st ed. 1948, 4th ed. 1987), ISBN 978-0-486-60255-4. The study of Euler's works: p 124.

^ 6. A History of Mathematics, by Carl B. Boyer, John Wiley & Sons (1968), ISBN 0-471-09374X.

^ 7. Number Theory: An Approach through History from Hammurapi to Legendre, by André Weil, Birkhäuser (1984), ISBN 0-8176-3141-0.